The Allure of Instant Clarity

In today’s high-pressure world, leaders are often expected to be decisive, emotionally composed, and endlessly productive. But beneath the surface, many quietly carry the weight of constant uncertainty and self-doubt. In moments of stress, after a difficult meeting or a sleepless night, more and more leaders are turning not to mentors, peers, or therapists, but to an unlikely source of comfort: ChatGPT.

It’s easy to see why. The responses are immediate. The tone is calm, articulate, and kind. Ask it, “Did I handle that conversation poorly?” and it replies with thoughtful reassurance. A wave of relief follows.

In that moment, it feels like clarity. Like a wise voice cutting through the fog.

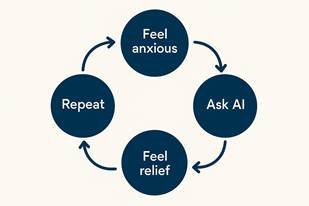

And so a subtle pattern begins:

- Feel anxious

- Ask AI

- Receive validation

- Feel better

- Repeat

But here’s the quiet truth many don’t realise.

This isn’t emotional regulation. It’s emotional outsourcing.

And what feels like relief may be reinforcing a deeper dependence.

Why Do We Find AI So Comforting?

A 2024 study published in the journal Communications Psychology and reported by Live Science revealed something startling:

People rated ChatGPT’s responses as more empathetic, helpful, and higher in quality than those given by real healthcare professionals.

The study asked licensed clinicians and ChatGPT to answer the same patient queries. The results? Participants overwhelmingly preferred the AI. They said it was warmer, more compassionate, even more human.

But why?

From a psychological perspective, the answer lies in emotional predictability. Human support, while meaningful, is also messy. A therapist might challenge your thinking. A mentor might disagree. A friend might be distracted or blunt.

But AI?

It never interrupts. Never questions too hard. It mirrors your emotional tone and offers support that feels tailor-made for your mood. If you sound anxious, it offers calm. If you sound uncertain, it offers clarity.

That responsiveness is soothing but also subtly misleading.

From an AI design perspective, large language models like ChatGPT are trained to optimise for user satisfaction and the relevance of their responses. They’re not trained to tell the emotional truth. They’re trained to make you feel understood.

So what happens next? Your brain gets hooked.

The Neuroscience of AI Reassurance

When ChatGPT gives a comforting response, it can trigger a release of dopamine, a neurochemical signal of reward and relief. Your amygdala (the brain’s threat detector) calms down. The prefrontal cortex (responsible for reflection and long-term regulation) takes a back seat.

The cycle gets wired in:

- You bring an anxious emotion

- The AI mirrors it back with compassion

- You feel soothed, chemically and emotionally

- You return again for more

But here’s the catch.

True growth doesn’t come from always feeling better.

It comes from learning to stay with discomfort without needing to escape it.

When we bypass discomfort too quickly, we also bypass the inner strength that discomfort can build.

Is Too Much Compassion a Bad Thing?

This might sound counterintuitive, but yes: sometimes too much compassion (especially when it’s unearned or reflexive) can become enabling.

In psychotherapy, this is known as over-validation. When a therapist only soothes and never challenges, the client may feel seen, but they don’t grow. They remain stuck in old fears and patterns.

AI doesn’t know when to push you gently. It doesn’t know when your thinking is distorted. It can’t discern between healthy discomfort and panic. It simply follows the tone of your question.

So while its “compassion” may feel good in the moment, it can actually stunt emotional resilience in the long term.

Real-World Leaders Are Using AI This Way, and It’s Increasing

Salesforce CEO Marc Benioff recently shared in interviews that he turns to ChatGPT for emotional support, even comparing it to therapy. And in my own work as a psychotherapist and executive coach, I hear similar stories all the time.

C-suite clients and founders confide that they’re using ChatGPT when anxiety spikes or decisions loom. Not for research or analysis, but for relief.

They’re not alone. They’re just early adopters of a trend we need to understand more deeply.

AI Can’t Build Your Nervous System

Therapy isn’t just about talking. It’s about training the nervous system to tolerate uncertainty. To feel uncomfortable and still stay grounded. It’s about learning, over time, that “I don’t know” isn’t a threat. It’s an invitation.

No AI can do that work for you.

The question isn’t “Is AI bad?”

It’s:

“What am I asking it to carry for me?”

When we start using ChatGPT to hold our emotional distress, our indecision, our fear of being wrong, we may feel momentarily steadied. But we also miss the opportunity to become stronger from within.

Can We Use AI Wisely?

Yes. When used consciously, AI can be a powerful thinking partner. It can offer perspectives, help brainstorm, and even gently organise your thoughts. But it should never replace your own reflective capacity, or your relationships with humans who can truly hold your complexity.

Here’s what I encourage leaders to practice:

- Notice the impulse: “This is me trying to escape discomfort.”

- Pause: Breathe for 30 seconds. Let the nervous system settle.

- Ask yourself: “What’s the truth I don’t want to face?”

- Remind yourself: “Not knowing is uncomfortable but not unsafe.”

That’s where growth begins.

Final Thought

ChatGPT is a brilliant innovation. But it’s not your nervous system.

It’s not your intuition.

It’s not your self-trust.

And it’s certainly not your therapist.

If you find yourself tempted to ask it one more time, hoping this answer will finally ease the fear… pause.

You don’t need a better answer.

You need a stronger anchor.

And the good news? That anchor isn’t out there.

It’s something you grow inside, one moment of uncertainty at a time.