It all started with the release of ChatGPT in November 2022, which proved to be a game-changer. In March 2023, Elon Musk and leading AI researchers called on the world to put the brakes on “giant AI experiments”. They described these as dangerous, cited potential existential risks to society and humanity, and urged regulators to define safety protocols for future AI systems and ensure that their deployment is safe. The open letter was issued by the non-profit Future of Life Institute and signed by more than 1,000 people, including Musk.

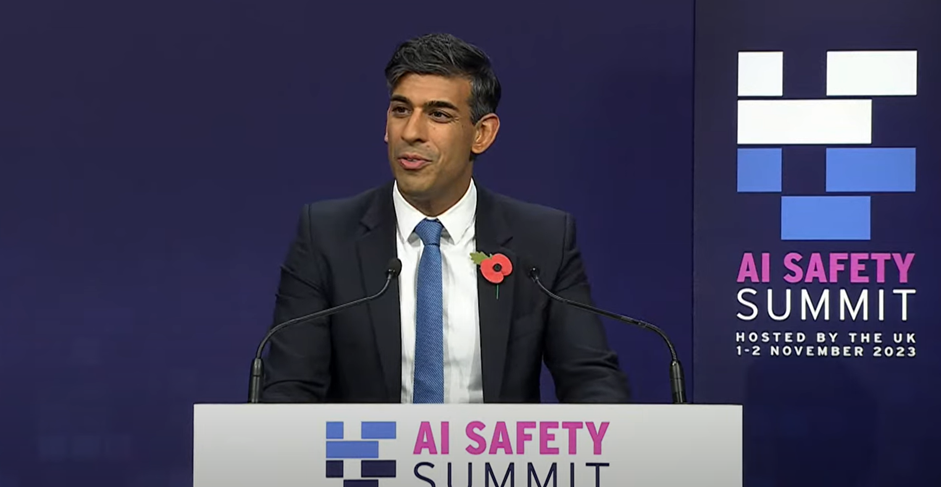

This sparked strong reactions from world leaders. Rishi Sunak was among those who took the threat seriously, seeing an opportunity to define regulation and governance and to enforce guardrails for AI. Sunak positioned the UK as a leader in AI safety and governance and sought ways to rein in the power of the big tech companies that develop these complex large language models before it’s too late. Almost exactly a year after ChatGPT was released, the AI Safety Summit was held at Bletchley Park, England: the birthplace of modern computing and home of Britain’s World War II code-breaking operation, where computer science pioneer Alan Turing worked.

Summit Overview

The summit, a passion project of Sunak’s, was held on 1 and 2 November 2023 and brought together some 100 world leaders, technology executives, computer scientists and academic experts. The US, UK, Australia, China and the EU were among the 28 countries and the European Union that signed a declaration warning of the “potentially catastrophic risk” that artificial intelligence poses to humanity. They prioritised immediate risks from AI, such as bias and misinformation, over concerns that it could end human civilisation.

The agreement is a list of pledges to ensure AI is “designed, developed, deployed, and used in a manner that is safe, trustworthy, responsible and remains human-centric.” Signatories also committed to working together through existing international forums to promote cooperation on addressing the risks of AI across its lifecycle, including identifying risks of shared concern, building understanding and developing policies. However, each nation can classify and categorise AI risks according to its own national circumstances and legal frameworks.

Sunak also announced that the biggest tech companies will, for the first time, allow governments to examine their AI tools, a step he says will slow the development of AI systems that can compete with humans. The threats discussed ranged from AI-enhanced cyber-attacks that can learn to defeat cyber-defence technologies to advanced autonomous weapons that could be programmed to hunt and kill humans.

The Bletchley Declaration, the first formal international agreement on a framework for safe AI, was signed by 28 countries and the EU, including the UK, US, India and China. It calls for global cooperation in tackling the risks, including potential privacy breaches and the displacement of jobs. “The Bletchley Declaration stresses safe, responsible AI development, urges international cooperation to mitigate risks and promotes global benefits.”

The big breakthrough was the creation of an agreed framework around the nature of AI’s risks. It doesn’t set out a detailed, actionable plan; however, it mobilised the world leaders at the forum to reach consensus on a common approach to preventing future catastrophes.

What did the US bring to the Summit?

Two days before the Summit, President Biden issued an executive order requiring companies building powerful AI systems that pose risks to US national security, the economy, public health or safety to share their safety test results with the government before those systems are released to the public. US Vice-President Kamala Harris laid out actionable plans for the US government, including setting up its own AI Safety Institute to help govern artificial intelligence. Harris also indicated that the US already has the blueprint for those plans.

Takeaways from the conversation with Elon Musk

One statement from Musk made the rounds online: “There will be a point where no job is needed; AI will be able to do everything. You could have a job if you wanted to have a job for personal satisfaction, but the AI will be able to do everything.” Musk highlighted the potential benefits of AI while issuing stark warnings about “humanoid robots”, and predicted that AI would eventually take over all jobs.

Musk’s earlier warning about “the possibility that AI can wipe out humanity” still stands, and this view has always been divisive in the tech community. Meanwhile, other scientists believe human connection will continue to thrive in healthcare, hospitality, teaching and many other sectors, where no major workforce displacement is expected in the foreseeable future.

Wrap-up

This Summit was a critical step in the right direction, bringing world leaders towards agreement on the problems posed by “frontier AI” that different countries are trying to tackle. There is growing momentum behind the idea that artificial intelligence in all its forms needs more regulation and much closer oversight. AI can clearly improve people’s lives in healthcare, education and the economy, but it could also wreak havoc on the scale of a pandemic or nuclear war.

The real change, however, is yet to come in the following months. Governments are worried about what will happen next year, when new and more powerful AI models emerge. Sunak also insisted that the risks must be fully understood before rushing to regulate. It is no secret that the Prime Minister wants tech companies to invest in and develop their products in the UK, hoping for economic benefits. How regulation and innovation will be balanced remains to be determined.

A new global hub based in the UK and tasked with testing the safety of emerging types of AI has been backed by leading AI companies and nations, as the world’s first AI Safety Institute launched on 2 November. The UK has agreed two partnerships to collaborate on AI safety testing, with the US AI Safety Institute and with the Government of Singapore, two of the world’s biggest AI powers.

There was very limited discussion of the environmental impact of AI’s energy-intensive computing and data centres, which remains an open question in the effort to address climate change.

What’s Next?

The world’s first AI Safety Institute, now established in the UK, is tasked with testing the safety of emerging types of AI. The Institute will carefully test new types of frontier AI before and after they are released, to address the potentially harmful capabilities of AI models. This includes exploring the full range of risks, from social harms such as bias and misinformation to the most unlikely but extreme risks, such as humanity losing control of AI completely.

In undertaking this research, the AI Safety Institute will look to work closely with the Alan Turing Institute, the UK’s national institute for data science and AI. The AI Safety Institute will also have priority access to cutting-edge supercomputing capacity to help develop its research programme into the safety of frontier AI models and to support the government with this analysis.

Over the coming weeks and months, we expect more governments and corporations to set out plans to turn some of these aspirations into action. The conference also looks set to be the first of many, with future summits already planned in South Korea and France.

AI has immense potential to do good, but to realise the benefits, our societies must be confident that risks and biases are being addressed and that AI’s evolution remains human-centric.