Improving Facial Emotion Recognition Using Pre-Trained Deep Learning Models

Human facial emotion recognition (FER) has gained popularity due to its promising results. The main task in FER is to map a facial image to its emotional state. The traditional approach to FER consists of feature extraction followed by classification, but its results have been limited. Currently, deep learning, especially convolutional neural networks (CNNs), is widely used because of its capabilities and structure, and using transfer learning within the deep learning pipeline makes it even more effective for FER. The proposed FER system has been demonstrated with eight pre-trained deep convolutional neural network (DCNN) models, namely VGG-16, VGG-19, ResNet-18, ResNet-34, ResNet-50, ResNet-152, Inception-v3, and DenseNet-161, on the well-known KDEF facial image dataset, where transfer learning helped achieve higher accuracy.

Introduction to Facial Expression Recognition

Recognizing facial expressions is attracting growing attention, especially because of its use in psychology, forensic science, healthcare, and human-computer interaction [1,2,3]. Existing methods usually use shallow CNNs with few layers, whereas this methodology uses deeper pre-trained models such as VGG-19, which have many more hidden layers, and then fine-tunes them to make them more effective. Because it is based on transfer learning (TL), the methodology saves computational power and time by avoiding training from scratch on a large dataset. It has also been tested on both frontal and profile views of KDEF [4,5,6,7,8,9,10].

About the Dataset

KDEF (Karolinska Directed Emotional Faces) is a widely used dataset for research in facial emotion recognition. It contains 4900 images of 70 persons, half of them female and half male. Each person shows seven facial expressions: happy, sad, surprised, angry, disgusted, afraid, and neutral. Every expression is captured from five viewing angles (0°, 45°, 90°, 135°, and 180°), ranging from full left profile to full right profile, and each person was photographed in two sessions, giving 70 × 7 × 5 × 2 = 4900 images. The images are in color with a resolution of 562×762 pixels.

Figure 1: Sample images from the KDEF dataset.

Methodology for Conventional FER:

Conventional methods consist of two separate steps: extracting features, such as geometric features, from facial images, and then classifying them into facial emotion states. A number of studies have compared the results of conventional FER approaches with those of DCNN approaches [11,12]. The next sections briefly explain the contribution of TL to FER.

Machine Learning-Based Approach for FER:

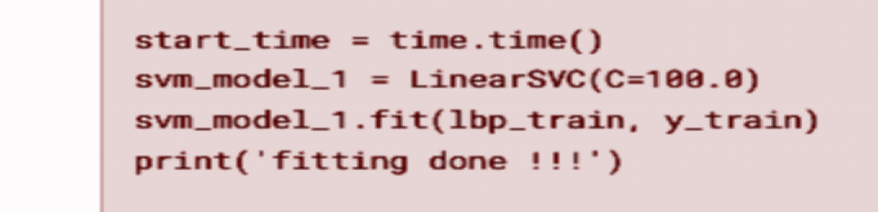

FER is a challenging task in AI, especially with conventional machine learning approaches. Several studies have compared machine learning models with deep learning models for FER and found that the deep learning models consistently performed better. For instance, KNN has been used after feature extraction, and SVMs (support vector machines) have been used with feature representations such as the discrete wavelet transform (DWT). In our case, we used a linear SVC for classification, which draws linear decision boundaries between the emotion classes. During training, a linear SVM finds the hyperplane that maximizes the margin between positive and negative samples; with several emotion classes, such boundaries are combined, for example one per class in a one-vs-rest scheme. Despite these strengths, the model does not perform well on KDEF, for several reasons. First, it can only model linear relationships, whereas the mapping from facial images to emotions is not linear, so the classes cannot be separated correctly. Second, it cannot recognize complex patterns inside the images. Consequently, the model underfits the dataset. Sample code and its output are shown in Figures 2 and 3.

Figure 2: Sample code on KDEF dataset by using Linear SVM.

The output is:

Figure 3: Output of above code on KDEF dataset by using Linear SVM.

These results show that a linear SVM is not a good fit for KDEF. Many other models, such as KNN, Fisher's linear discriminant, radial basis function (RBF) models, LDA, logistic regression, naive Bayes, and regression trees, have also been tried, and all of them yield low accuracy. Moreover, these methods focus on frontal views and ignore profile views, which biases their results. For these reasons, the traditional machine learning approach to FER does not work effectively [14,15,16].
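The linearity limitation discussed above can be illustrated with a small NumPy sketch of a one-vs-rest linear SVM trained by subgradient descent on the hinge loss. This is not the LinearSVC code shown in Figure 2; the function names, learning rate, and epoch count are illustrative assumptions. On KDEF, `X` would hold flattened pixel (or extracted feature) vectors; here two toy datasets show that the model fits linearly separable classes but cannot fit an XOR-style pattern, because any linear boundary misclassifies at least one of its four points.

```python
import numpy as np

def train_linear_svm(X, y, C=1.0, lr=0.1, epochs=500):
    """One-vs-rest linear SVM. For each class k, minimise
    0.5*||w||^2 + (C/n) * sum_i max(0, 1 - t_i*(w.x_i + b))
    with full-batch subgradient descent, where t_i = +1 for class k, else -1."""
    classes = np.unique(y)
    n, d = X.shape
    W = np.zeros((len(classes), d))
    b = np.zeros(len(classes))
    for k, c in enumerate(classes):
        t = np.where(y == c, 1.0, -1.0)
        for _ in range(epochs):
            margins = t * (X @ W[k] + b[k])
            active = margins < 1  # samples misclassified or inside the margin
            grad_w = W[k] - (C / n) * (t[active, None] * X[active]).sum(axis=0)
            grad_b = -(C / n) * t[active].sum()
            W[k] -= lr * grad_w
            b[k] -= lr * grad_b
    return classes, W, b

def predict(classes, W, b, X):
    """Assign each sample to the class with the largest linear score."""
    return classes[np.argmax(X @ W.T + b, axis=1)]

# Linearly separable toy data: a linear boundary fits it.
X_sep = np.array([[-2., -2.], [-2., -1.], [-1., -2.], [2., 2.], [2., 1.], [1., 2.]])
y_sep = np.array([0, 0, 0, 1, 1, 1])
acc_sep = np.mean(predict(*train_linear_svm(X_sep, y_sep), X_sep) == y_sep)

# XOR-style data: no linear boundary can classify all four points.
X_xor = np.array([[0., 0.], [1., 1.], [0., 1.], [1., 0.]])
y_xor = np.array([0, 0, 1, 1])
acc_xor = np.mean(predict(*train_linear_svm(X_xor, y_xor), X_xor) == y_xor)
print(f"separable: {acc_sep:.2f}, XOR: {acc_xor:.2f}")
```

The XOR case is a small stand-in for the non-linear structure of facial expressions: a non-linear feature extractor, such as a CNN, is needed before a linear classifier can separate such classes.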

Deep Learning-Based Approach for FER:

Deep learning is a more recent approach to facial emotion recognition (FER), and its results are more promising; many such studies have been reported in the literature. Zhao et al. [7] combined a Deep Belief Network (DBN) with a neural network (NN), where the DBN performs unsupervised feature learning and the NN classifies the emotions. Pranav et al. [13] evaluated a standard CNN architecture with two convolutional layers on a facial emotion dataset. Ding et al. [17] extended a deep face recognition network to achieve higher FER accuracy in an architecture called FaceNet2ExpNet. Shaees et al. [18] used transfer learning with a pre-trained AlexNet whose features are classified with an SVM.

Liliana [19] used a deep CNN with 18 convolutional layers and 4 subsampling layers for FER.

These methods use only frontal-view images; many researchers neglect profile views because they make the task more difficult.

Overview of CNN, Deep CNN Models, and Transfer Learning (TL):

Our aim is to find the DCNN model best suited to FER using TL. To this end, we conducted experiments comparing several pre-trained models.

Overview of Convolutional Neural Networks (CNNs):

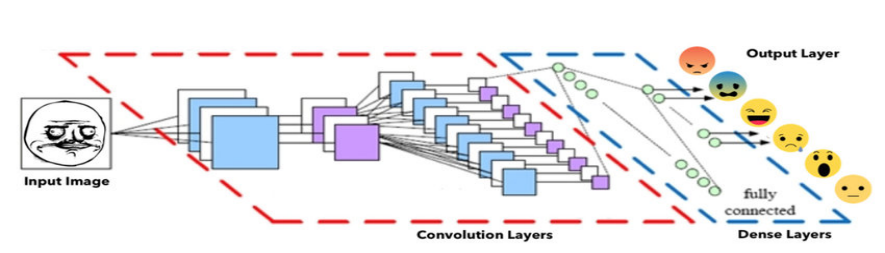

Figure 4: Basic CNN structure, consisting of convolutional layers and dense layers.

Because of its structure, a CNN is well suited to image processing [25]. It consists of an input layer, convolutional-pooling hidden layers, and an output layer.

A convolutional layer slides a small kernel over its input to detect local patterns. A pooling layer then downsamples the resulting feature maps to reduce their size.
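The convolution and pooling steps can be written out in a few lines of NumPy. This sketch assumes a single-channel image, stride 1, no padding, and non-overlapping 2×2 max pooling; like most CNN libraries, it actually computes cross-correlation (the kernel is not flipped). The function names are illustrative. In the usage example, a 3×3 vertical-edge kernel responds only where the image changes from dark to bright, and pooling halves each spatial dimension.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Stride-1, unpadded 2-D cross-correlation of a single-channel image."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the kernel-sized patch at this position.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a whole
    window are dropped (floor behaviour)."""
    h, w = fmap.shape[0] // size, fmap.shape[1] // size
    windows = fmap[:h * size, :w * size].reshape(h, size, w, size)
    return windows.max(axis=(1, 3))

# A 6x6 image that is dark on the left and bright on the right.
image = np.zeros((6, 6))
image[:, 3:] = 1.0
# Vertical-edge kernel: responds to left-to-right brightness increases.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

fmap = conv2d_valid(image, kernel)  # 4x4; every row is [0, 3, 3, 0]
pooled = max_pool2d(fmap)           # 2x2; every entry is 3
```

The edge is detected wherever the kernel straddles it, and pooling keeps that strong response while discarding its exact position.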

A standard CNN, as illustrated in Figure 5, has a sequential design: convolutional layers extract the features, pooling layers reduce their size, and fully connected layers make the final decision. Finally, the loss layer measures the error during training. Because the features are learned from the data rather than hand-crafted, CNNs can be more effective for FER than traditional approaches [4,24].

Figure 5: The generic architecture of a convolutional neural network with two convolutional-pooling layers.
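The sequential design of Figure 5 can be sketched in Keras. This is a minimal illustration assuming the `tf.keras` API; the filter counts (32 and 64), the 128-unit dense layer, and the 48×48×3 input are hypothetical choices, not the configuration used in the experiments. The loss passed to `compile` plays the role of the loss layer.

```python
import tensorflow as tf

def build_standard_cnn(input_shape=(48, 48, 3), num_classes=7):
    """Two convolution-pooling stages followed by dense layers.
    Layer sizes are illustrative assumptions."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=input_shape),
        tf.keras.layers.Conv2D(32, (3, 3), activation="relu"),  # feature extraction
        tf.keras.layers.MaxPooling2D((2, 2)),                   # downsampling
        tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),          # fully connected
        tf.keras.layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ])

model = build_standard_cnn()
# The loss function is what measures the training error.
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
```

With these assumed sizes, the two convolution-pooling stages shrink a 48×48 input to 10×10×64 feature maps, so most of the model's parameters sit in the first dense layer.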

DCNN models and TL Motivation:

A DCNN contains a large number of hidden layers and takes high-dimensional images as input, which makes it challenging to train. Nevertheless, many DCNN models have emerged and been used over the past decade. AlexNet, one of the first DCNNs to achieve high accuracy on large-scale image classification, has 5 convolutional layers and was trained on about 1.2 million ImageNet images [20]. ZFNet is very similar to AlexNet but uses a smaller kernel in its first layer [21]. VGG-16 and VGG-19, with 16 and 19 weight layers, respectively, are deeper CNN models that further improve results [22]. DenseNet-161 has 157 hidden layers and connects them densely to improve the flow of information and the results [23].

Despite the strong performance of these models, training them remains demanding and may require substantial computational power and resources. When data and resources are limited, transfer learning is an effective way to address these challenges.

Facial Emotion Recognition (FER) Using TL in Deep CNNs:

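Training a model from scratch is computationally expensive, so reusing an already-trained model through transfer learning is an attractive choice. This works because the features a CNN learns are largely general: the first layers recognize primitive features such as corners and edges, while later layers detect more complex features such as textures and shapes. These general features carry over to new tasks, so mainly the later layers need to be adapted to FER.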

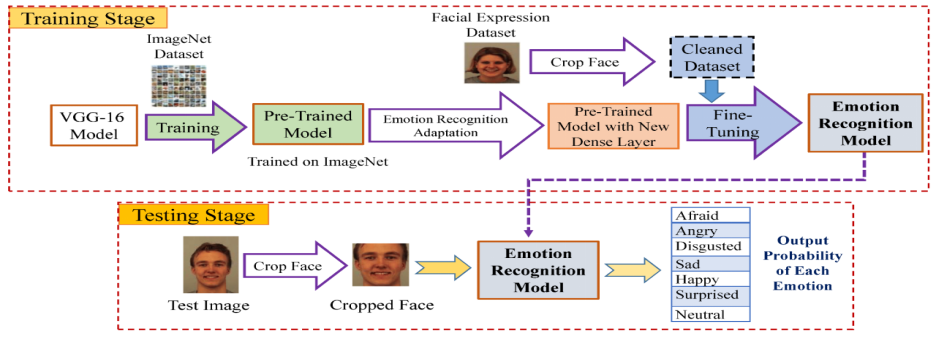

Figure 6: Illustration of the proposed FER system based on transfer learning in deep CNN.

Because training a DCNN from scratch is expensive, we use a model such as VGG-16 that has already been pre-trained on a large dataset such as ImageNet [22] and adapt it for FER.

After choosing the pre-trained model, we append dense (fully connected) layers to it for emotion classification. Fine-tuning then proceeds on the emotion dataset: first only the newly added layers are trained, and then progressively more of the pre-trained layers can be unfrozen, as explored in Table 2. Training uses the Adam optimizer. Before training, the facial regions are cropped and resized to the model's input size, and data augmentation is applied; augmentation increases the variety of the training images, which reduces overfitting and improves accuracy. Reusing a pre-trained model in this way simplifies the task and saves time, and the gradual fine-tuning lowers the risk of damaging the pre-trained features while improving results.

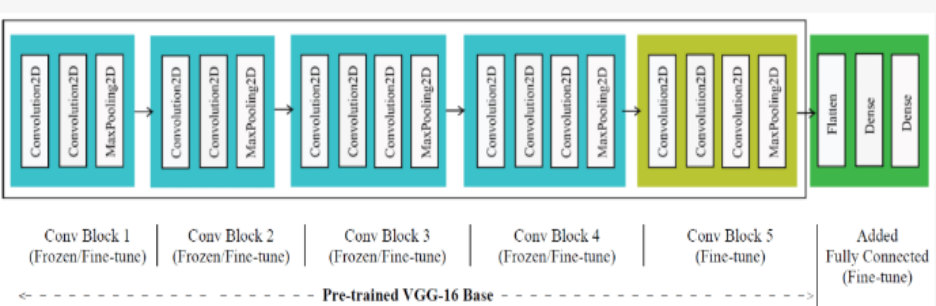

Figure 7: Illustration of the proposed emotion recognition model: the VGG-16 model plus dense (i.e., fully connected) layers. The VGG-16 model is pre-trained on the ImageNet dataset. A block marked ‘Fine-tune’ is one whose weights are updated during fine-tuning.
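The transfer-learning setup of Figure 7 can be sketched with the Keras applications API. This is a minimal sketch, not the authors' code: it assumes `tf.keras`, and the head (`Flatten`, a 256-unit `Dense` layer, dropout 0.5) and the mode names `"dense_only"`, `"block5"`, and `"entire"` are hypothetical labels chosen to mirror the training modes compared in Table 2. Only the learning rate of 0.0005 comes from the text; the Adam beta values are left at their Keras defaults.

```python
import tensorflow as tf

NUM_CLASSES = 7  # afraid, angry, disgusted, happy, neutral, sad, surprised

def build_fer_model(mode="dense_only", weights="imagenet", input_shape=(224, 224, 3)):
    """VGG-16 convolutional base plus a new dense head for the emotion classes.

    mode (hypothetical names):
      "dense_only" - freeze the whole VGG-16 base, train only the new head
      "block5"     - additionally train VGG-16's last convolutional block
      "entire"     - train every layer (base and head)
    """
    if mode not in ("dense_only", "block5", "entire"):
        raise ValueError(f"unknown mode: {mode}")
    base = tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=input_shape)
    for layer in base.layers:
        if mode == "entire":
            layer.trainable = True
        elif mode == "block5":
            layer.trainable = layer.name.startswith("block5")
        else:
            layer.trainable = False

    # New classification head (sizes are illustrative assumptions).
    x = tf.keras.layers.Flatten()(base.output)
    x = tf.keras.layers.Dense(256, activation="relu")(x)
    x = tf.keras.layers.Dropout(0.5)(x)
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)
    model = tf.keras.Model(base.input, outputs)

    # Learning rate from the text; beta_1/beta_2 keep the Keras defaults.
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=0.0005),
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

With `weights="imagenet"`, Keras downloads the pre-trained weights on first use. Calling `fit` on the cropped, resized images then updates only the layers left trainable by the chosen mode.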

Experimental Studies:

OpenCV is used to crop the facial regions of the images. The cropped images were resized to 224×224 pixels, the default input size of most of the pre-trained DCNN models used (Inception-v3's default is 299×299). The Adam optimizer was configured with a learning rate of 0.0005, beta1 = 0.9, and beta2 = 0.009. Only light data augmentation was applied.
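To make the optimizer settings concrete, here is one Adam update written out in NumPy, following the standard Adam formulas (exponential moving averages of the gradient and of its square, with bias correction). The epsilon of 1e-7 matches the Keras default and is an assumption; the function and variable names are illustrative. The usage line plugs in the learning rate and beta values reported above.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=0.0005, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad        # moving average of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # moving average of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias correction for zero init
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

# First step with the values reported in the text.
p, m, v = adam_step(np.array([1.0, -2.0]), np.array([0.3, -4.0]),
                    np.zeros(2), np.zeros(2), t=1,
                    lr=0.0005, beta1=0.9, beta2=0.009)
```

Because of the bias correction, the first step moves each parameter by almost exactly the learning rate, 0.0005, against the sign of its gradient, regardless of the gradient's scale or the beta values.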

The data were split with a test size of 0.1, i.e., 90% for training and 10% for testing, and 10-fold cross-validation was used to obtain a more reliable accuracy estimate. The models were implemented in Python with Keras [26] on a TensorFlow backend.
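The text does not say how the folds were formed. Because Table 4 shows exactly 70 test images per class out of 490, a stratified split is a plausible assumption. The following NumPy sketch (the function name and seed are hypothetical) builds 10-fold train/test indices in which every fold holds out 10% of each class.

```python
import numpy as np

def stratified_kfold(labels, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation,
    splitting each class separately so every fold keeps the class balance."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    chunks_per_class = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)
        rng.shuffle(idx)
        chunks_per_class.append(np.array_split(idx, k))
    all_idx = np.arange(len(labels))
    for f in range(k):
        # Fold f tests on the f-th chunk of every class, trains on the rest.
        test_idx = np.sort(np.concatenate([chunks[f] for chunks in chunks_per_class]))
        train_idx = np.setdiff1d(all_idx, test_idx)
        yield train_idx, test_idx

# KDEF-sized example: 7 classes x 700 images = 4900 labels.
labels = np.repeat(np.arange(7), 700)
folds = list(stratified_kfold(labels))
```

Each fold then has 4410 training and 490 test images, and every test image appears in exactly one fold.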

Experimental Results and Analysis:

This section presents the results obtained with different techniques and pre-trained DCNN models on the KDEF dataset.

Table 1 reports the test-set accuracies of a standard CNN with two convolutional layers (3×3 kernels, each followed by 2×2 max pooling) on KDEF for input sizes from 360×360 down to 48×48. Each value is the best test accuracy obtained within 50 iterations. Larger inputs generally gave better results: for instance, the same model achieves 73.87% accuracy for 360×360 inputs but only 61.63% for 48×48 inputs, although the highest accuracy in Table 1 (80.81%) was obtained at 128×128.

Table 1: Test set accuracies of standard CNN with two layers on KDEF for different input-size images.

| Input Image Size | KDEF Test Accuracy |
|---|---|
| 360 × 360 | 73.87% |
| 224 × 224 | 73.46% |
| 128 × 128 | 80.81% |
| 64 × 64 | 69.39% |
| 48 × 48 | 61.63% |
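One way to see what changes with input size is to track the spatial size of the feature maps that reach the dense layers. Assuming 'valid' (unpadded) 3×3 convolutions with stride 1 and non-overlapping 2×2 max pooling, which the text does not state explicitly, each convolution-pooling stage maps a side length n to floor((n - 2) / 2). The function name below is illustrative.

```python
def feature_map_side(n, stages=2, kernel=3, pool=2):
    """Side length after `stages` rounds of valid convolution (stride 1)
    followed by non-overlapping max pooling."""
    for _ in range(stages):
        n = n - kernel + 1  # unpadded convolution shrinks by kernel - 1
        n = n // pool       # pooling keeps the floor of n / pool
    return n

for size in (360, 224, 128, 64, 48):
    side = feature_map_side(size)
    print(f"{size}x{size} input -> {side}x{side} feature maps")
```

Under these assumptions, the dense layers see 88×88 feature maps for 360×360 inputs but only 10×10 for 48×48 inputs, so the smallest inputs retain far less spatial detail of the face.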

Since fine-tuning is central to TL, we first experimented with different fine-tuning modes before comparing pre-trained DCNN models. Table 2 reports the test accuracies of VGG-16 under different training modes, i.e., different choices of which layers are trained. The total number of iterations was kept the same as for the standard CNN. Training the entire model (dense layers plus the complete VGG-16 base) gives the best accuracy, 93.47%, whereas training the whole model from scratch reaches only 23.35%.

Table 2: Comparison of test set accuracies with VGG-16 for different training modes in fine-tuning.

| Training Mode | KDEF Test Accuracy |
|---|---|
| Dense layers only | 77.55% |
| Dense layers + VGG-16 Block 5 | 91.83% |
| Entire model (dense layers + VGG-16 base) | 93.47% |
| Whole model from scratch | 23.35% |

The same approach was applied to eight pre-trained DCNN models: VGG-16, VGG-19-BN, ResNet-18, ResNet-34, ResNet-50, ResNet-152, Inception-v3, and DenseNet-161.

For the VGG, ResNet, and DenseNet models, the number in the name indicates the depth of the network (its number of weight layers); for Inception-v3, it denotes the version. As before, 10% of the data was held out for testing. The accuracy on KDEF ranges from 93.47% to 98.78%, as shown in Table 3.

Table 3: Comparison of the test set accuracies with different pre-trained deep CNN models on KDEF datasets.

| Pre-trained DCNN Model | KDEF Test Accuracy (10% Test Sample) |
|---|---|
| VGG-16 | 93.47% |
| VGG-19 | 96.73% |
| ResNet-18 | 94.29% |
| ResNet-34 | 96.33% |
| ResNet-50 | 97.55% |
| ResNet-152 | 96.73% |
| Inception-v3 | 97.55% |
| DenseNet-161 | 98.78% |

Deeper models generally performed better, although the trend is not strict: for example, ResNet-152 (96.73%) scored below ResNet-50 (97.55%). DenseNet-161, the deepest of these models, achieved the highest accuracy, 98.78%.

In the 10-fold CV, the best-performing model, DenseNet-161, misclassified only 6 of the 490 test samples (484/490 = 98.78%, matching its accuracy in Table 3). Table 4 shows the misclassifications by category: three afraid images were classified as surprised, two surprised images were classified as afraid, and one sad image was classified as disgusted.

Table 4: Confusion matrix for the seven emotion classes of the KDEF dataset (rows: actual class; columns: predicted class).

| Actual / Predicted | AF | AN | DI | HA | NA | SA | SU |
|---|---|---|---|---|---|---|---|
| Afraid (AF) | 67 | 0 | 0 | 0 | 0 | 0 | 3 |
| Angry (AN) | 0 | 70 | 0 | 0 | 0 | 0 | 0 |
| Disgusted (DI) | 0 | 0 | 70 | 0 | 0 | 0 | 0 |
| Happy (HA) | 0 | 0 | 0 | 70 | 0 | 0 | 0 |
| Neutral (NA) | 0 | 0 | 0 | 0 | 70 | 0 | 0 |
| Sad (SA) | 0 | 0 | 1 | 0 | 0 | 69 | 0 |
| Surprised (SU) | 2 | 0 | 0 | 0 | 0 | 0 | 68 |
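The summary statistics of Table 4 can be recomputed directly from the matrix. The NumPy sketch below (names are illustrative) derives overall accuracy, per-class recall (correct / actual), and per-class precision (correct / predicted) from the table's values; 484 of 490 correct gives 98.78%, the DenseNet-161 accuracy in Table 3.

```python
import numpy as np

LABELS = ["AF", "AN", "DI", "HA", "NA", "SA", "SU"]
# Rows: actual class; columns: predicted class (values from Table 4).
CM = np.array([
    [67, 0, 0, 0, 0, 0, 3],
    [0, 70, 0, 0, 0, 0, 0],
    [0, 0, 70, 0, 0, 0, 0],
    [0, 0, 0, 70, 0, 0, 0],
    [0, 0, 0, 0, 70, 0, 0],
    [0, 0, 1, 0, 0, 69, 0],
    [2, 0, 0, 0, 0, 0, 68],
])

def summarize(cm):
    """Return correct count, total, accuracy, per-class recall and precision."""
    correct = np.trace(cm)                    # diagonal = correct predictions
    total = cm.sum()
    recall = np.diag(cm) / cm.sum(axis=1)     # divide by actual-class totals
    precision = np.diag(cm) / cm.sum(axis=0)  # divide by predicted-class totals
    return correct, total, correct / total, recall, precision

correct, total, acc, recall, precision = summarize(CM)
print(f"{correct}/{total} correct -> accuracy {acc:.2%}")
for name, r, p in zip(LABELS, recall, precision):
    print(f"{name}: recall {r:.3f}, precision {p:.3f}")
```

The per-class figures show that all remaining errors involve afraid, surprised, and sad, with afraid/surprised confusions accounting for five of the six.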

Conclusion:

Facial emotion recognition (FER) using transfer learning and deep convolutional neural networks (CNNs) is a significant step toward enabling machines to understand and interpret human emotions. This article outlines advances in FER methodology, emphasizing the role of pre-trained deep learning models in improving the efficiency and accuracy of emotion detection. Experiments on the Karolinska Directed Emotional Faces (KDEF) dataset with VGG-16, VGG-19, the ResNet series, Inception-v3, and DenseNet-161 show the potential of transfer learning to overcome the computational limitations of traditional approaches and achieve high FER accuracy. The key is to use transfer learning to reuse the feature-detection capabilities of models pre-trained on large image datasets. Fine-tuning these models to recognize specific emotional expressions greatly reduces the computational resources and training time required, and it lets the models adapt to the variations in facial expressions present in the training data.

This study proposes a methodology based on DCNN models and TL. It reuses pre-trained models such as VGG-16 to reduce computational cost and training time, and gradually fine-tuning their layers significantly improves the results. The experimental results show that deep learning models, particularly when enhanced through transfer learning, outperform conventional machine learning techniques for FER, as evidenced by the high accuracies achieved on the KDEF dataset across all seven emotions. Deeper models generally performed better, with DenseNet-161 achieving the highest accuracy, which suggests that model depth helps capture the fine details needed for accurate emotion recognition. In the future, the methodology may be extended to applications such as patient monitoring.

These advances are potentially applicable across many fields, including psychology, healthcare, forensic science, and human-computer interaction. Accurately interpreting human emotions enables more empathetic and responsive computing: devices could adjust their responses to the user's emotional state, making technology more intuitive and better aligned with human needs.

In summary, integrating transfer learning with deep CNNs is an important development for FER, offering a powerful tool for understanding the emotional dimension of human behavior. The success of this approach validates the use of pre-trained models for specialized tasks and opens new avenues for research and application in various sectors. Continued refinement of these methods promises to further narrow the gap between human emotions and their computational understanding.

References:

- [1] Ekman, P.; Friesen, W.V. Measuring facial movement. Environ. Psychol. Nonverbal Behav. 1976, 1, 56–75.

- [2] Ekman, P. Universal Facial Expressions of Emotion. Calif. Ment. Health 1970, 8, 151–158.

- [3] Suchitra, P.S.; Tripathi, S. Real-time emotion recognition from facial images using Raspberry Pi II. In Proceedings of the 2016 3rd International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 11–12 February 2016; pp. 666–670.

- [4] Alom, M.Z.; Taha, T.M.; Yakopcic, C.; Westberg, S.; Sidike, P.; Nasrin, M.S.; Hasan, M.; Van Essen, B.C.; Awwal, A.A.S.; Asari, V.K. A State-of-the-Art Survey on Deep Learning Theory and Architectures. Electronics 2019, 8, 292.

- [5] Sahu, M.; Dash, R. A Survey on Deep Learning: Convolution Neural Network (CNN). In Smart Innovation, Systems and Technologies; Springer: Singapore, 2021; Volume 153, pp. 317–325.

- [6] Mollahosseini, A.; Chan, D.; Mahoor, M.H. Going deeper in facial expression recognition using deep neural networks. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10.

- [7] Zhao, X.; Shi, X.; Zhang, S. Facial Expression Recognition via Deep Learning. IETE Tech. Rev. 2015, 32, 347–355.

- [8] Li, J.; Huang, S.; Zhang, X.; Fu, X.; Chang, C.-C.; Tang, Z.; Luo, Z. Facial Expression Recognition by Transfer Learning for Small Datasets. In Advances in Intelligent Systems and Computing; Springer: Berlin/Heidelberg, Germany, 2020; Volume 895, pp. 756–770.

- [9] Bendjillali, R.I.; Beladgham, M.; Merit, K.; Taleb-Ahmed, A. Improved Facial Expression Recognition Based on DWT Feature for Deep CNN. Electronics 2019, 8, 324.

- [10] Ngoc, Q.T.; Lee, S.; Song, B.C. Facial Landmark-Based Emotion Recognition via Directed Graph Neural Network. Electronics 2020, 9, 764.

- [11] Huang, Y.; Chen, F.; Lv, S.; Wang, X. Facial Expression Recognition: A Survey. Symmetry 2019, 11, 1189.

- [12] Li, S.; Deng, W. Deep Facial Expression Recognition: A Survey. IEEE Trans. Affect. Comput. 2020.

- [13] Pranav, E.; Kamal, S.; Chandran, C.S.; Supriya, M. Facial emotion recognition using deep convolutional neural network. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 317–320.

- [14] Shih, F.Y.; Chuang, C.-F.; Wang, P.S.P. Performance comparisons of facial expression recognition in JAFFE database. Int. J. Pattern Recognit. Artif. Intell. 2008, 22, 445–459.

- [15] Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on Local Binary Patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

- [16] Jabid, T.; Kabir, H.; Chae, O. Robust Facial Expression Recognition Based on Local Directional Pattern. ETRI J. 2010, 32, 784–794.

- [17] Ding, H.; Zhou, S.K.; Chellappa, R. FaceNet2ExpNet: Regularizing a deep face recognition net for expression recognition. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 118–126.

- [18] Shaees, S.; Naeem, H.; Arslan, M.; Naeem, M.R.; Ali, S.H.; Aldabbas, H. Facial Emotion Recognition Using Transfer Learning. In Proceedings of the 2020 International Conference on Computing and Information Technology (ICCIT-1441), Tabuk, Saudi Arabia, 9–10 September 2020; pp. 1–5.

- [19] Liliana, D.Y. Emotion recognition from facial expression using deep convolutional neural network. J. Phys. Conf. Ser. 2019, 1193, 012004.

- [20] Antonellis, G.; Gavras, A.G.; Panagiotou, M.; Kutter, B.L.; Guerrini, G.; Sander, A.C.; Fox, P.J. Shake Table Test of Large-Scale Bridge Columns Supported on Rocking Shallow Foundations. J. Geotech. Geoenviron. Eng. 2015, 141, 04015009.

- [21] Zeiler, M.D.; Fergus, R. Visualizing and Understanding Convolutional Networks. In Proceedings of the European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

- [22] Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- [23] Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- [24] Akhand, M.A.H.; Ahmed, M.; Rahman, M.M.H.; Islam, M. Convolutional Neural Network Training incorporating Rotation-Based Generated Patterns and Handwritten Numeral Recognition of Major Indian Scripts. IETE J. Res. 2018, 64, 176–194.

- [25] Sahu, M.; Dash, R. A Survey on Deep Learning: Convolution Neural Network (CNN). In Smart Innovation, Systems and Technologies; Springer: Singapore, 2021; Volume 153, pp. 317–325.

- [26] Chollet, F. Keras: The Python Deep Learning Library. Available online: https://keras.io (accessed on 15 November 2020).